Part II: Information Systems for Strategic Advantage

Chapter 10: Information Systems Development

Learning Objectives

Upon successful completion of this chapter, you will be able to:

- Explain the overall process of developing new software;

- Explain the differences between software development methodologies;

- Understand the different types of programming languages used to develop software;

- Understand some of the issues surrounding the development of websites and mobile applications; and

- Identify the four primary implementation policies.

Introduction

When someone has an idea for a new function to be performed by a computer, how does that idea become reality? If a company wants to implement a new business process and needs new hardware or software to support it, how do they go about making it happen? This chapter covers the different methods of taking those ideas and bringing them to reality, a process known as information systems development.

Programming

Software is created via programming, as discussed in Chapter 2. Programming is the process of creating a set of logical instructions for a digital device to follow using a programming language. The process of programming is sometimes called “coding” because the developer takes the design and encodes it into a programming language which then runs on the computer.

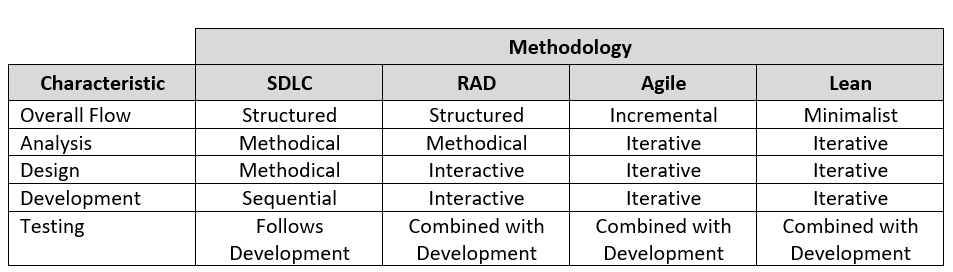

The process of developing good software is usually not as simple as sitting down and writing some code. Sometimes a programmer can quickly write a short program to solve a need, but in most instances the creation of software is a resource-intensive process that involves several different groups of people in an organization. In order to do this effectively, the groups agree to follow a specific software development methodology. The following sections review several different methodologies for software development, as summarized in the table below.

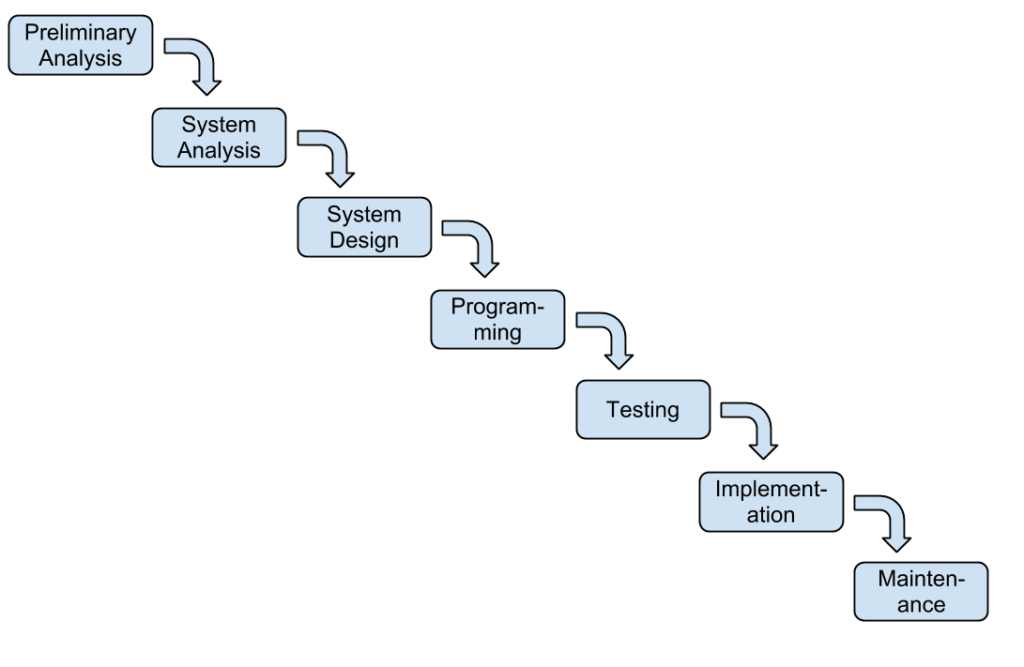

Systems Development Life Cycle

The Systems Development Life Cycle (SDLC) was first developed in the 1960s to manage the large software projects associated with corporate systems running on mainframes. This approach to software development is very structured and risk averse, designed to manage large projects that include multiple programmers and systems that have a large impact on the organization. It requires a clear, upfront understanding of what the software is supposed to do and is not amenable to design changes. This approach is roughly similar to an assembly line process, where it is clear to all stakeholders what the end product should do and that major changes are difficult and costly to implement.

Various definitions of the SDLC methodology exist, but most contain the following phases.

- Preliminary Analysis. A request for a replacement or new system is first reviewed. The review includes questions such as: What is the problem-to-be-solved? Is creating a solution possible? What alternatives exist? What is currently being done about it? Is this project a good fit for our organization? After addressing these questions, a feasibility study is launched. The feasibility study includes an analysis of the technical feasibility, the economic feasibility or affordability, and the legal feasibility. This step is important in determining if the project should be initiated and may be performed by someone with a title such as Requirements Analyst or Business Analyst.

- System Analysis. In this phase one or more system analysts work with different stakeholder groups to determine the specific requirements for the new system. No programming is done in this step. Instead, procedures are documented, key players/users are interviewed, and data requirements are developed in order to get an overall impression of exactly what the system is supposed to do. The result of this phase is a system requirements document. This phase may be performed by someone with a title such as Systems Analyst.

- System Design. In this phase, a designer takes the system requirements document created in the previous phase and develops the specific technical details required for the system. It is in this phase that the business requirements are translated into specific technical requirements. The design for the user interface, database, data inputs and outputs, and reporting are developed here. The result of this phase is a system design document. This document will have everything a programmer needs to actually create the system. Depending on the scale of the project, this phase may be performed by someone with a title such as Systems Analyst, Developer, or Systems Architect.

- Programming. The code finally gets written in the programming phase. Using the system design document as a guide, programmers develop the software. The result of this phase is an initial working program that meets the requirements specified in the system analysis phase and the design developed in the system design phase. These tasks are done by persons with titles such as Developer, Software Engineer, Programmer, or Coder.

- Testing. In the testing phase the software program developed in the programming phase is put through a series of structured tests. The first is a unit test, which evaluates individual parts of the code for errors or bugs. This is followed by a system test in which the different components of the system are tested to ensure that they work together properly. Finally, the user acceptance test allows those who will be using the software to test the system to ensure that it meets their standards. Any bugs, errors, or problems found during testing are resolved and then the software is tested again. These tasks are done by persons with titles such as Tester, Testing Analyst, or Quality Assurance.

- Implementation. Once the new system is developed and tested, it has to be implemented in the organization. This phase includes training the users, providing documentation, and data conversion from the previous system to the new system. Implementation can take many forms, depending on the type of system, the number and type of users, and how urgent it is that the system become operational. These different forms of implementation are covered later in the chapter.

- Maintenance. This final phase takes place once the implementation phase is complete. In the maintenance phase the system has a structured support process in place. Reported bugs are fixed and requests for new features are evaluated and implemented. Also, system updates and backups of the software are made for each new version of the program. Since maintenance is normally an Operating Expense (OPEX) while much of development is a Capital Expense (CAPEX), funds normally come out of different budgets or cost centers.
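The unit test described in the Testing phase can be sketched with Python's standard unittest module. This is a minimal illustration, not a prescribed testing procedure: the function under test, calculate_net_pay, and its flat-tax rule are hypothetical, invented only so there is a small unit of code to check in isolation.

```python
import unittest


def calculate_net_pay(gross: float, tax_rate: float) -> float:
    """Hypothetical unit of code: net pay after a flat tax rate."""
    if not 0 <= tax_rate <= 1:
        raise ValueError("tax_rate must be between 0 and 1")
    return round(gross * (1 - tax_rate), 2)


class NetPayTest(unittest.TestCase):
    """Each test checks one small behavior of the unit in isolation."""

    def test_typical_value(self):
        self.assertEqual(calculate_net_pay(1000.0, 0.2), 800.0)

    def test_zero_tax(self):
        self.assertEqual(calculate_net_pay(500.0, 0.0), 500.0)

    def test_invalid_rate_is_rejected(self):
        with self.assertRaises(ValueError):
            calculate_net_pay(1000.0, 1.5)
```

Saved in a file such as test_pay.py, the tests can be run with `python -m unittest test_pay`. A failing test points directly at the unit that broke, before the system test checks how units work together.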

The SDLC methodology is sometimes referred to as the waterfall methodology to represent how each step is a separate part of the process. Only when one step is completed can another step begin. After each step an organization must decide when to move to the next step. This methodology has been criticized for being quite rigid, allowing movement in only one direction, namely, forward in the cycle. For example, changes to the requirements are not allowed once the process has begun. No software is available until after the programming phase.
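The one-way flow of the waterfall can be sketched as a tiny state machine. This is a hypothetical teaching illustration rather than a tool organizations actually use: the phase names come from the list above, advance() moves the project exactly one step forward only after the current phase is declared complete, and any attempt to go back is refused.

```python
PHASES = [
    "Preliminary Analysis",
    "System Analysis",
    "System Design",
    "Programming",
    "Testing",
    "Implementation",
    "Maintenance",
]


class WaterfallProject:
    """Tracks a project that may only move forward, one phase at a time."""

    def __init__(self) -> None:
        self.index = 0  # every project starts in Preliminary Analysis

    @property
    def phase(self) -> str:
        return PHASES[self.index]

    def advance(self, phase_complete: bool) -> str:
        # The organization decides after each step whether to move on;
        # an incomplete phase blocks every later phase.
        if not phase_complete:
            raise RuntimeError(f"{self.phase} is not complete")
        if self.index == len(PHASES) - 1:
            raise RuntimeError("Maintenance is the final phase")
        self.index += 1
        return self.phase

    def go_back(self) -> None:
        # The waterfall allows movement in only one direction.
        raise RuntimeError("Returning to an earlier phase is not allowed")


project = WaterfallProject()
project.advance(phase_complete=True)
print(project.phase)  # System Analysis
```

The rigidity criticized above is visible in the design: there is no way to revisit System Analysis once Programming has started, so a changed requirement has nowhere to go.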

Again, SDLC was developed for large, structured projects. Projects using SDLC can sometimes take months or years to complete. Because of its inflexibility and the availability of new programming techniques and tools, many other software development methodologies have been developed. Many of these retain some of the underlying concepts of SDLC, but are not as rigid.

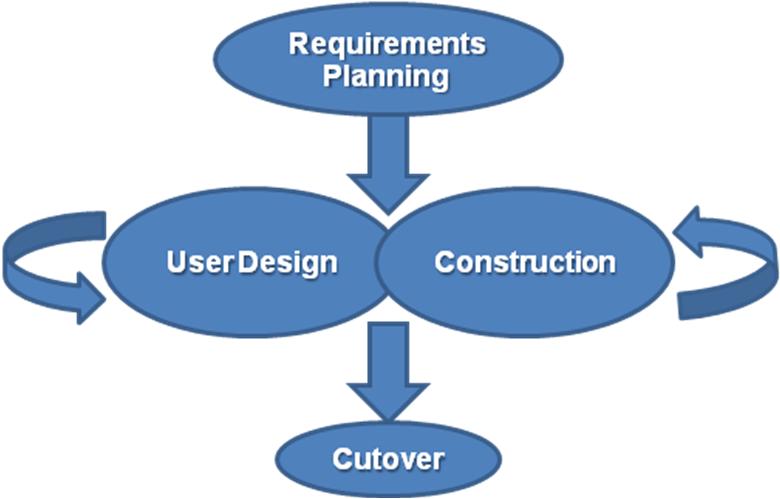

Rapid Application Development

Rapid Application Development (RAD) focuses on quickly building a working model of the software, getting feedback from users, and then using that feedback to update the working model. After several iterations of development, a final version is developed and implemented.

The RAD methodology consists of four phases.

- Requirements Planning. This phase is similar to the preliminary analysis, system analysis, and design phases of the SDLC. In this phase the overall requirements for the system are defined, a team is identified, and feasibility is determined.

- User Design. In the user design phase representatives of the users work with the system analysts, designers, and programmers to interactively create the design of the system. Sometimes a Joint Application Development (JAD) session is used to facilitate working with all of these various stakeholders. A JAD session brings all of the stakeholders together for a structured discussion about the design of the system. Application developers also participate and observe, trying to understand the essence of the requirements.

- Construction. In the construction phase the application developers, working with the users, build the next version of the system through an interactive process. Changes can be made as developers work on the program. This step is executed in parallel with the User Design step in an iterative fashion, making modifications until an acceptable version of the product is developed.

- Cutover. Cutover involves switching from the old system to the new software. Timing of the cutover phase is crucial and is usually done when there is low activity. For example, IT systems in higher education undergo many changes and upgrades during the summer or between fall semester and spring semester. Approaches to the migration from the old to the new system vary between organizations. Some prefer to simply start the new software and terminate use of the old software. Others choose to use an incremental cutover, bringing one part online at a time. A cutover to a new accounting system may be done one module at a time such as general ledger first, then payroll, followed by accounts receivable, etc. until all modules have been implemented. A third approach is to run both the old and new systems in parallel, comparing results daily to confirm the new system is accurate and dependable. A more thorough discussion of implementation strategies appears near the end of this chapter.
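The third cutover approach, running the old and new systems in parallel, can be sketched as a daily reconciliation: the same transactions go to both systems and any disagreement is flagged for review. Both payroll functions below are hypothetical stand-ins for an old and a new system, and the overtime rule in the new one is an assumption added to produce a difference.

```python
from typing import Callable, Dict, List


def old_payroll(hours: float, rate: float) -> float:
    """Hypothetical legacy calculation: straight time only."""
    return round(hours * rate, 2)


def new_payroll(hours: float, rate: float) -> float:
    """Hypothetical replacement: time and a half after 40 hours."""
    regular = min(hours, 40) * rate
    overtime = max(hours - 40, 0) * rate * 1.5
    return round(regular + overtime, 2)


def compare_daily(
    transactions: List[Dict[str, float]],
    old: Callable[[float, float], float],
    new: Callable[[float, float], float],
) -> List[Dict[str, float]]:
    """Run both systems on the same input and return any mismatches."""
    mismatches = []
    for txn in transactions:
        old_result = old(txn["hours"], txn["rate"])
        new_result = new(txn["hours"], txn["rate"])
        if old_result != new_result:
            mismatches.append({**txn, "old": old_result, "new": new_result})
    return mismatches


today = [{"hours": 38, "rate": 20.0}, {"hours": 45, "rate": 20.0}]
for row in compare_daily(today, old_payroll, new_payroll):
    print(row)
```

Only the 45-hour record is flagged, because the two systems treat overtime differently. Someone still has to decide which result is correct; the parallel run only guarantees that the difference is noticed before the old system is retired.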

As you can see, the RAD methodology is much more compressed than SDLC. Many of the SDLC steps are combined and the focus is on user participation and iteration. This methodology is much better suited for smaller projects than SDLC and has the added advantage of giving users the ability to provide feedback throughout the process. SDLC requires more documentation and attention to detail and is well suited to large, resource-intensive projects. RAD makes more sense for smaller projects that are less resource intensive and need to be developed quickly.

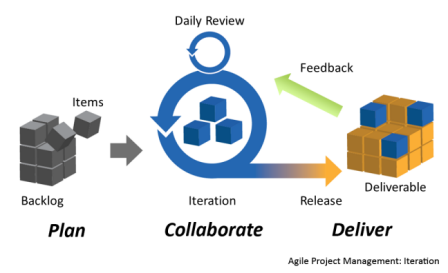

Agile Methodologies

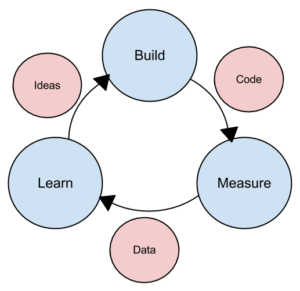

Agile methodologies are a group of methodologies that utilize incremental changes with a focus on quality and attention to detail. Each increment is released in a specified period of time (called a time box), creating a regular release schedule with very specific objectives. While considered a separate methodology from RAD, the two methodologies share some of the same principles such as iterative development, user interaction, and flexibility to change. The agile methodologies are based on the “Agile Manifesto,” first released in 2001.

Agile and Iterative Development

The diagram above emphasizes iterations in the center of agile development. You should notice how the building blocks of the developing system move from left to right, a block at a time, not the entire project. Blocks that are not acceptable are returned through feedback and the developers make the needed modifications. Finally, notice the Daily Review at the top of the diagram. Agile Development means constant evaluation by both developers and customers (notice the term “Collaboration”) of each day’s work.

The characteristics of agile methodology include:

- Small cross-functional teams that include development team members and users;

- Daily status meetings to discuss the current state of the project;

- Short time-frame increments (from days to one or two weeks) for each change to be completed; and

- Working project at the end of each iteration which demonstrates progress to the stakeholders.
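Time-boxing can be illustrated with a small planning sketch: work items are taken from a prioritized backlog until the time box is full, and whatever does not fit waits for the next iteration. The backlog items, their effort estimates in days, and the 10-day capacity are hypothetical, and real agile teams plan with far more judgment than this greedy rule.

```python
from typing import List, Tuple

# Hypothetical backlog: (feature, estimated effort in days), highest priority first.
Backlog = List[Tuple[str, int]]


def plan_time_boxes(backlog: Backlog, capacity: int) -> List[List[str]]:
    """Split a prioritized backlog into fixed-length time boxes.

    Items stay in priority order; an item that does not fit in the current
    time box starts the next one. Items larger than a whole time box are
    rejected, since they must be broken into smaller increments first.
    """
    boxes: List[List[str]] = [[]]
    used = 0
    for feature, effort in backlog:
        if effort > capacity:
            raise ValueError(f"{feature} must be split into smaller pieces")
        if used + effort > capacity:
            boxes.append([])  # start the next time box
            used = 0
        boxes[-1].append(feature)
        used += effort
    return boxes


backlog = [("login", 3), ("search", 5), ("reports", 4), ("export", 2)]
for number, box in enumerate(plan_time_boxes(backlog, capacity=10), start=1):
    print(f"Iteration {number}: {box}")
```

Running it prints two iterations, login and search in the first and reports and export in the second, each of which would end with a working increment shown to stakeholders.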

The goal of agile methodologies is to provide the flexibility of an iterative approach while ensuring a quality product.

Lean Methodology

One last methodology to discuss is a relatively new concept taken from the business bestseller The Lean Startup by Eric Ries. Lean focuses on taking an initial idea and developing a Minimum Viable Product (MVP). The MVP is a working software application with just enough functionality to demonstrate the idea behind the project. Once the MVP is developed, the development team gives it to potential users for review. Feedback on the MVP is generated in two forms: first, direct observation of and discussion with the users; and second, usage statistics gathered from the software itself. Using these two forms of feedback, the team determines whether they should continue in the same direction or rethink the core idea behind the project, change the functions, and create a new MVP. This change in strategy is called a pivot. Several iterations of the MVP are developed, with new functions added each time based on the feedback, until a final product is completed.
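The decision to continue or pivot can be sketched as a rule that combines the two forms of feedback. Everything specific here is a hypothetical assumption for illustration: the usage statistic (share of trial users who return), the 40% retention target, and the minimum interview score of 3 on a 1-to-5 scale.

```python
from dataclasses import dataclass


@dataclass
class MvpFeedback:
    """Feedback gathered on one MVP iteration (hypothetical fields)."""

    trial_users: int        # people who tried the MVP
    returning_users: int    # usage statistic gathered by the software itself
    interview_score: float  # average rating from user discussions, 1 to 5


def decide(feedback: MvpFeedback, retention_target: float = 0.40,
           min_score: float = 3.0) -> str:
    """Return "persevere" or "pivot" using both forms of feedback."""
    if feedback.trial_users <= 0:
        raise ValueError("no one has tried the MVP yet")
    retention = feedback.returning_users / feedback.trial_users
    if retention >= retention_target and feedback.interview_score >= min_score:
        return "persevere"  # keep the core idea; add functions next iteration
    return "pivot"  # rethink the core idea and build a new MVP


print(decide(MvpFeedback(trial_users=200, returning_users=90, interview_score=3.8)))
print(decide(MvpFeedback(trial_users=200, returning_users=30, interview_score=4.1)))
```

The second MVP leads to a pivot even though users praised it in interviews, because few of them came back. Weighing the two kinds of feedback is a judgment call that a real team would make with much more context than a single threshold.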

The biggest difference between the lean methodology and the other methodologies is that the full set of requirements for the system is not known when the project is launched. As each iteration of the project is released, the statistics and feedback gathered are used to determine the requirements. The lean methodology works best in an entrepreneurial environment where a company is interested in determining if their idea for a program is worth developing.

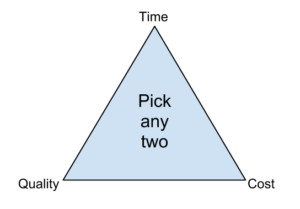

Sidebar: The Quality Triangle

When developing software or any sort of product or service, there exists a tension between the developers and the different stakeholder groups such as management, users, and investors. This tension relates to how quickly the software can be developed (time), how much money will be spent (cost), and how well it will be built (quality). The quality triangle is a simple concept. It states that for any product or service being developed, you can only address two of the following: time, cost, and quality.

So why can only two of the three factors in the triangle be considered? Because each of these three components is in competition with the others! If you are willing and able to spend a lot of money, then a project can be completed quickly with high quality results because you can provide more resources towards its development. If a project’s completion date is not a priority, then it can be completed at a lower cost with higher quality results using a smaller team with fewer resources. Of course, these are just generalizations, and different projects may not fit this model perfectly. But overall, this model is designed to help you understand the trade-offs that must be made when you are developing new products and services.

There are other, fundamental reasons why low-cost, high-quality projects done quickly are so difficult to achieve.

- The human mind is analog, while the machines that software runs on are digital. These are fundamentally different: human thinking depends on context and nuance, whereas a digital machine ultimately reduces everything to a 1 or a 0. Things that seem obvious to the human mind are not so obvious when forced into a 1 or 0 binary choice.

- Human beings leave their imprints on the applications or systems they design. This is best summed up by Conway’s Law (1968): “Organizations that design information systems are constrained to do so in a way that mirrors their internal communication processes.” Organizations with poor communication processes will find it very difficult to communicate requirements and priorities, especially for projects at the enterprise level (i.e., projects that affect the whole organization).

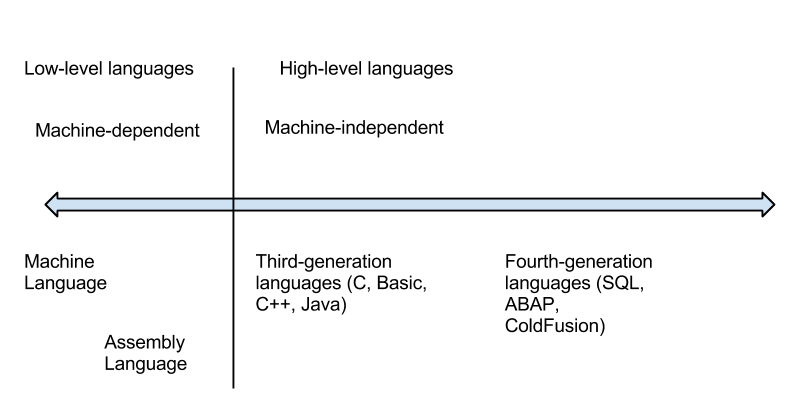

Programming Languages

As noted earlier, developers create programs using one of several programming languages. A programming language is an artificial language that provides a way for a developer to create programming code to communicate logic in a format that can be executed by the computer hardware. Over the past few decades, many different types of programming languages have evolved to meet a variety of needs. One way to characterize programming languages is by their “generation.”

Generations of Programming Languages

Early languages were specific to the type of hardware that had to be programmed. Each type of computer hardware had a different low level programming language. In those early languages very specific instructions had to be entered line by line – a tedious process.

First generation languages were called machine code because programming was done in the format the machine/computer could read. So programming was done by directly setting actual ones and zeroes (the bits) in the program using binary code. Here is an example program that adds 1234 and 4321 using machine language:

10111001 11010010 00000100
10001001 00001110 00000000 00000000
10111001 11100001 00010000
10001001 00001110 00000010 00000000
10100001 00000000 00000000
10001011 00011110 00000010 00000000
00000011 11000011
10100011 00000100 00000000

Assembly language is the second generation language and uses short, English-like mnemonics (such as MOV for “move” and ADD) rather than raw machine-code instructions, making it easier to program. An assembly language program must be run through an assembler, which converts it into machine code. Here is a sample program that adds 1234 and 4321 using assembly language.

MOV CX,1234
MOV DS:[0],CX
MOV CX,4321
MOV DS:[2],CX
MOV AX,DS:[0]
MOV BX,DS:[2]
ADD AX,BX
MOV DS:[4],AX

Third-generation languages are not specific to the type of hardware on which they run and are much more like spoken languages. Many third-generation languages must be compiled. The developer writes the program in a form known generically as source code, then the compiler converts the source code into machine code, producing an executable file. Well-known third-generation languages include BASIC, C, Python, and Java. Here is an example using BASIC:

A=1234
B=4321
C=A+B
END
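For comparison, here is the same calculation written in Python, another of the third-generation languages named above. It is only a translation of the BASIC example, not part of the original program.

```python
# Same logic as the BASIC example: store two numbers and add them.
a = 1234
b = 4321
c = a + b
print(c)  # 5555
```

The same source runs unchanged on any computer that has a Python interpreter installed, which is what “not specific to the type of hardware” means in practice; compare it with the machine code and assembly versions above, which are tied to one processor family.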

Fourth generation languages are a class of programming tools that enable fast application development using intuitive interfaces and environments. Many times a fourth generation language has a very specific purpose, such as database interaction or report-writing. These tools can be used by those with very little formal training in programming and allow for the quick development of applications and/or functionality. Examples of fourth-generation languages include: Clipper, FOCUS, SQL, and SPSS.
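SQL illustrates the fourth-generation style well: the developer states what result is wanted, and the database works out how to produce it. The sketch below runs a single SQL query through Python's built-in sqlite3 module; the employee table and its rows are hypothetical sample data.

```python
import sqlite3

# An in-memory database with a hypothetical employee table.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE employee (name TEXT, department TEXT, salary REAL)")
conn.executemany(
    "INSERT INTO employee VALUES (?, ?, ?)",
    [("Ana", "Sales", 52000), ("Ben", "Sales", 48000), ("Cy", "IT", 61000)],
)

# One declarative statement: the developer writes no loops or counters.
query = """
    SELECT department, COUNT(*), AVG(salary)
    FROM employee
    GROUP BY department
    ORDER BY department
"""
rows = conn.execute(query).fetchall()
conn.close()

for department, headcount, average in rows:
    print(department, headcount, average)
```

Producing the same headcount and average salary per department in a third-generation language would require explicit loops and running totals; in SQL the whole report is one statement.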

Why would anyone want to program in a lower-level language when it requires so much more work? The answer is similar to why some prefer to drive manual transmission vehicles instead of automatic transmission, namely, control and efficiency. Lower-level languages, such as assembly language, can be much more efficient and execute much more quickly. The developer has finer control over the hardware as well. Sometimes higher- and lower-level languages are mixed together to get the best of both worlds. The programmer can create the overall structure and interface using a higher-level language but use lower-level languages for the parts of the program that are used many times, require more precision, or need greater speed.

Compiled vs. Interpreted

Besides identifying a programming language based on its generation, we can also classify it through the distinction of whether it is compiled or interpreted. A computer language is written in a human-readable form. In a compiled language the program code is translated into a machine-readable form called an executable that can be run on the hardware. Some well-known compiled languages include C, C++, and COBOL.

Interpreted languages require a runtime program to be installed in order to execute. Each time the user wants to run the software, the runtime program must interpret the program code line by line, then run it. Interpreted languages are generally easier to work with but also are slower and require more system resources. Examples of popular interpreted languages include BASIC, PHP, Perl, and Python. The web languages HTML and JavaScript are also considered interpreted because they require a browser in order to run.
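To make “interpret the program code line by line” concrete, here is a toy interpreter for the BASIC program shown earlier. It is a hypothetical teaching sketch, not how any real BASIC runtime is built: it understands only assignments of whole numbers, the + operator, and END, and it re-reads the source text every time it runs.

```python
from typing import Dict


def run_basic(source: str) -> Dict[str, int]:
    """Interpret a tiny, hypothetical subset of BASIC one line at a time.

    Supported statements: NAME=NUMBER, NAME=TERM+TERM+..., and END,
    where each TERM is a whole number or an already-assigned variable.
    """
    variables: Dict[str, int] = {}

    def value(term: str) -> int:
        term = term.strip()
        return int(term) if term.isdigit() else variables[term]

    # Nothing is translated ahead of time into an executable file;
    # each line is read, understood, and carried out on every run.
    for line_number, line in enumerate(source.splitlines(), start=1):
        statement = line.strip()
        if not statement:
            continue  # skip blank lines
        if statement == "END":
            break
        try:
            name, expression = statement.split("=", 1)
            variables[name.strip()] = sum(value(t) for t in expression.split("+"))
        except (KeyError, ValueError) as error:
            raise SyntaxError(
                f"line {line_number}: cannot run {statement!r}"
            ) from error
    return variables


program = """
A=1234
B=4321
C=A+B
END
"""
print(run_basic(program))  # {'A': 1234, 'B': 4321, 'C': 5555}
```

Because the work of understanding each line is repeated on every run, an interpreter does extra work that a compiler does only once, which is one reason interpreted programs tend to be slower.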

The Java programming language is an interesting exception to this classification, as it is actually a hybrid of the two. A program written in Java is first compiled into an intermediate form, called bytecode, that can be understood by the Java Virtual Machine (JVM). Each type of operating system has its own JVM, which must be installed before any Java program can be executed. The JVM approach allows a single Java program to run on many different types of operating systems.

Procedural vs. Object-Oriented

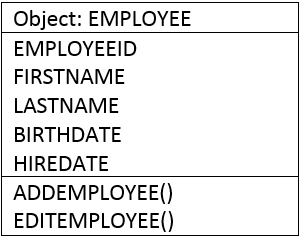

A procedural programming language is designed to allow a programmer to define a specific starting point for the program and then execute sequentially. All early programming languages worked this way. As user interfaces became more interactive and graphical, it made sense for programming languages to evolve to allow the user to have greater control over the flow of the program. An object-oriented programming language is designed so that the programmer defines “objects” that can take certain actions based on input from the user. In other words, a procedural program focuses on the sequence of activities to be performed, while an object-oriented program focuses on the different items being manipulated.

Consider a human resources system where an “EMPLOYEE” object would be needed. If the program needed to retrieve or set data regarding an employee, it would first create an employee object in the program and then set or retrieve the values needed. Every object has properties, which are descriptive fields associated with the object. The set of properties is also known as a schema: it is the logical view of the object, and when the object is stored in a database, each property typically corresponds to a column in the actual table, which is known as the physical view. The employee object has the properties “EMPLOYEEID”, “FIRSTNAME”, “LASTNAME”, “BIRTHDATE” and “HIREDATE”. An object also has methods, which can take actions related to the object. There are two methods in the example. The first is “ADDEMPLOYEE()”, which will create another employee record. The second is “EDITEMPLOYEE()”, which will modify an employee’s data.
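The EMPLOYEE object described above can be sketched as a Python class. The properties and the two methods follow the text; the Python-style lowercase names, the shared list that stands in for the employee table, and the way each method is implemented are assumptions made for illustration.

```python
from dataclasses import dataclass, fields
from datetime import date
from typing import ClassVar, List


@dataclass
class Employee:
    """The EMPLOYEE object: its properties are the fields below."""

    employee_id: int
    first_name: str
    last_name: str
    birth_date: date
    hire_date: date

    # Hypothetical stand-in for the employee table, shared by all objects.
    table: ClassVar[List["Employee"]] = []

    @classmethod
    def add_employee(cls, **properties) -> "Employee":
        """ADDEMPLOYEE(): create another employee record."""
        employee = cls(**properties)
        cls.table.append(employee)
        return employee

    def edit_employee(self, **changes) -> None:
        """EDITEMPLOYEE(): modify this employee's data."""
        allowed = {f.name for f in fields(self)}
        for name, value in changes.items():
            if name not in allowed:
                raise AttributeError(f"Employee has no property {name!r}")
            setattr(self, name, value)


alice = Employee.add_employee(
    employee_id=1,
    first_name="Alice",
    last_name="Ng",
    birth_date=date(1990, 4, 2),
    hire_date=date(2021, 6, 1),
)
alice.edit_employee(last_name="Ng-Park")
print(alice.last_name, len(Employee.table))  # Ng-Park 1
```

The program works with the employee as a single item that carries both its data and the actions allowed on it, rather than as a sequence of steps, which is the object-oriented focus described above.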

Programming Tools

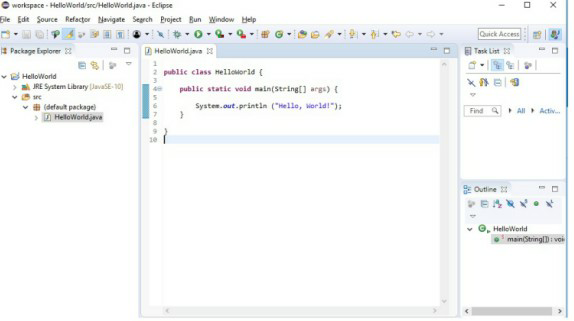

To write a program, you need little more than a text editor and a good idea. However, to be productive you must be able to check the syntax of the code, and, in some cases, compile the code. To be more efficient at programming, additional tools, such as an Integrated Development Environment (IDE) or computer-aided software-engineering (CASE) tools can be used.

Integrated Development Environment

For most programming languages an Integrated Development Environment (IDE) can be used to develop the program. An IDE provides a variety of tools for the programmer, and usually includes:

- Editor. An editor is used for writing the program. Commands are automatically color coded by the IDE to identify command types. For example, a programming comment might appear in green and a programming statement might appear in black.

- Help system. A help system gives detailed documentation regarding the programming language.

- Compiler/Interpreter. The compiler/interpreter converts the programmer’s source code into machine language so it can be executed/run on the computer.

- Debugging tool. Debugging assists the developer in locating errors and finding solutions.

- Check-in/check-out mechanism. This tool allows teams of programmers to work simultaneously on a program without overwriting another programmer’s code.
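The check-in/check-out idea can be sketched as a simple registry: a file checked out by one programmer cannot be checked out by another until it is checked back in. This is a hypothetical illustration with invented class and file names; real source-control systems are far more elaborate, and many merge parallel edits instead of locking files.

```python
from typing import Dict


class CheckoutRegistry:
    """Hypothetical check-in/check-out registry for shared source files."""

    def __init__(self) -> None:
        self.checked_out: Dict[str, str] = {}  # file name -> programmer

    def check_out(self, filename: str, programmer: str) -> None:
        holder = self.checked_out.get(filename)
        if holder is not None and holder != programmer:
            raise PermissionError(f"{filename} is checked out by {holder}")
        self.checked_out[filename] = programmer

    def check_in(self, filename: str, programmer: str) -> None:
        if self.checked_out.get(filename) != programmer:
            raise PermissionError(f"{programmer} does not hold {filename}")
        del self.checked_out[filename]


registry = CheckoutRegistry()
registry.check_out("payroll.py", "dana")
try:
    registry.check_out("payroll.py", "eli")  # blocked while Dana holds it
except PermissionError as error:
    print(error)  # payroll.py is checked out by dana
registry.check_in("payroll.py", "dana")
registry.check_out("payroll.py", "eli")  # allowed after check-in
```

Because only one programmer can hold a file at a time, one person's changes cannot silently overwrite another's.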

Examples of IDEs include Microsoft’s Visual Studio and the Eclipse Foundation’s Eclipse. Visual Studio is the IDE for all of Microsoft’s programming languages, including Visual Basic, Visual C++, and Visual C#. Eclipse can be used for Java, C, C++, Perl, Python, R, and many other languages.

CASE Tools

While an IDE provides several tools to assist the programmer in writing the program, the code still must be written. Computer-Aided Software Engineering (CASE) tools allow a designer to develop software with little or no programming. Instead, the CASE tool writes the code for the designer. CASE tools come in many varieties. Their goal is to generate quality code based on input created by the designer.
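As a rough illustration of a tool writing code from a designer’s input, the sketch below turns a short description of an object into Python source code and then runs it. The specification format and the generate_class function are hypothetical; actual CASE tools work from much richer models, such as diagrams, and generate far more than a single class.

```python
from typing import Any, Dict


def generate_class(name: str, properties: Dict[str, str]) -> str:
    """Write Python source for a simple class from a designer's spec.

    properties maps each property name to a type name, e.g. {"age": "int"}.
    """
    params = ", ".join(f"{prop}: {ptype}" for prop, ptype in properties.items())
    lines = [f"class {name}:", f"    def __init__(self, {params}) -> None:"]
    for prop in properties:
        lines.append(f"        self.{prop} = {prop}")
    if not properties:
        lines.append("        pass")  # a method body cannot be empty
    return "\n".join(lines) + "\n"


# The designer supplies only this description; the tool writes the code.
spec = {"employee_id": "int", "first_name": "str", "last_name": "str"}
source = generate_class("Employee", spec)
print(source)

# Load the generated code to confirm that it actually runs.
namespace: Dict[str, Any] = {}
exec(source, namespace)
employee = namespace["Employee"](7, "Ana", "Diaz")
print(employee.first_name)  # Ana
```

The designer never types the class definition; changing the specification and regenerating is how the design evolves, which is the core promise of CASE tools.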

Sidebar: Building a Website

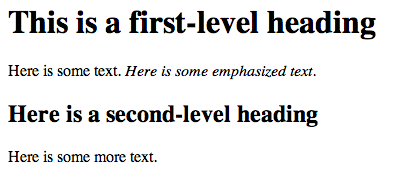

In the early days of the World Wide Web, the creation of a website required knowing how to use HyperText Markup Language (HTML). Today most websites are built with a variety of tools, but the final product that is transmitted to a browser is still HTML. At its simplest HTML is a text language that allows you to define the different components of a web page. These definitions are handled through the use of HTML tags with text between the tags or brackets. For example, an HTML tag can tell the browser to show a word in italics, to link to another web page, or to insert an image. The HTML code below uses two different types of headings (h1 and h2) with text below each heading. Some of the text has been italicized. The output as it would appear in a browser is shown after the HTML code.

<h1>This is a first-level heading</h1>
<p>Here is some text. <em>Here is some emphasized text.</em></p>
<h2>Here is a second-level heading</h2>
<p>Here is some more text.</p>

HTML code

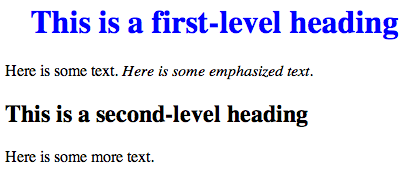

While HTML is used to define the components of a web page, Cascading Style Sheets (CSS) are used to define the styles of the components on a page. The use of CSS allows the style of a website to be set and stay consistent throughout. For example, a designer who wanted all first-level headings (h1) to be blue and centered could set the “h1” style to match. The following example shows how this might look.

<style>
h1 {
  color: blue;
  text-align: center;
}
</style>
<h1>This is a first-level heading</h1>
<p>Here is some text. <em>Here is some emphasized text.</em></p>
<h2>Here is a second-level heading</h2>
<p>Here is some more text.</p>

HTML code with CSS added

The combination of HTML and CSS can be used to create a wide variety of formats and designs and has been widely adopted by the web design community. The standards for HTML were originally set by a governing body called the World Wide Web Consortium (W3C); today they are maintained as the HTML Living Standard by the WHATWG, in collaboration with the W3C. HTML5 introduced new standards for video, audio, and drawing.

Many developers do not write a website out manually in a text editor. Instead, they use web design tools that generate the HTML and CSS for them. Tools such as Adobe Dreamweaver allow the designer to create a web page that includes images and interactive elements without writing a single line of code. However, professional web designers still need to learn HTML and CSS in order to have full control over the web pages they are developing.

Sidebar: Building a Mobile App

In many ways building an application for a mobile device is exactly the same as building an application for a traditional computer. Understanding the requirements for the application, designing the interface, and working with users are all steps that still need to be carried out.

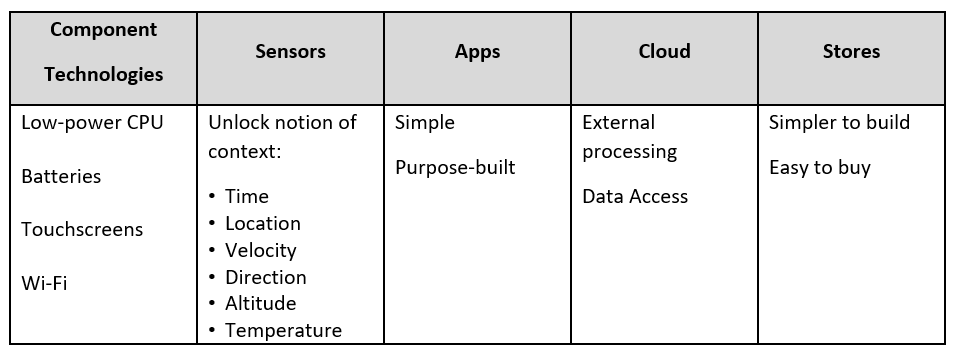

Mobile Apps

So what’s different about building an application for a mobile device? There are five primary differences:

- Breakthroughs in component technologies. Mobile devices require multiple components that are not only smaller but more energy-efficient than those in full-size computers (laptops or desktops). For example, low-power CPUs combined with longer-life batteries, touchscreens, and Wi-Fi enable very efficient computing on a phone, which needs to do much less actual processing than its full-size counterparts.

- Sensors have unlocked the notion of context. The combination of sensors like GPS, gyroscopes, and cameras enables devices to be aware of things like time, location, velocity, direction, altitude, attitude, and temperature. Location in particular provides a host of benefits.

- Simple, purpose-built, task-oriented apps are easy to use. Mobile apps are much narrower in scope than enterprise software and therefore easier to use. Likewise, they need to be intuitive and not require any training.

- Immediate access to data extends the value proposition. In addition to the app providing a simpler interface on the front end, cloud-based data services provide access to data in near real-time, from virtually anywhere (e.g., banking, travel, driving directions, and investing). Having access to the cloud is needed to keep mobile device size and power use down.

- App stores have simplified acquisition. Developing, acquiring, and managing apps has been revolutionized by app stores such as Apple’s App Store and Google Play. Standardized development processes and app requirements allow developers outside Apple and Google to create new apps with a built-in distribution channel. Low average app prices (many apps are free) have fueled demand.

In sum, the differences between building a mobile app and other types of software development look like this:

Building a mobile app for both iOS and Android operating systems is known as cross platform development. There are a number of third-party toolkits available for creating your app. Many will convert existing code such as HTML5, JavaScript, Ruby, C++, etc. However, if your app requires sophisticated programming, a cross platform developer kit may not meet your needs.

Responsive Web Design (RWD) focuses on making web pages render well on every device: desktop, laptop, tablet, smartphone. Through the concept of fluid layout, RWD automatically adjusts the content to the device on which it is being viewed.

Build vs. Buy

When an organization decides that a new program needs to be developed, they must determine if it makes more sense to build it themselves or to purchase it from an outside company. This is the “build vs. buy” decision.

There are many advantages to purchasing software from an outside company. First, it is generally less expensive to purchase software than to build it. Second, when software is purchased, it is available much more quickly than if the package is built in-house. Software can take months or years to build. A purchased package can be up and running within a few days. Third, a purchased package has already been tested and many of the bugs have already been worked out. It is the role of a systems integrator to make various purchased systems and the existing systems at the organization work together.

There are also disadvantages to purchasing software. First, the same software you are using can be used by your competitors. If a company is trying to differentiate itself based on a business process incorporated into purchased software, it will have a hard time doing so if its competitors use the same software. Another disadvantage to purchasing software is the process of customization. If you purchase software from a vendor and then customize it, you will have to manage those customizations every time the vendor provides an upgrade. This can become an administrative headache, to say the least.

Even if an organization decides to buy software, it still makes sense to go through the same analysis as if the software were going to be developed in-house. This is an important decision that could have a long-term strategic impact on the organization.

Web Services

Chapter 3 discussed how the move to cloud computing has allowed software to be viewed as a service. One option, known as web services, allows companies to license functions provided by other companies instead of writing the code themselves. Web services can greatly simplify the addition of functionality to a website.

Suppose a company wishes to provide a map showing the location of someone who has called its support line. By using the Google Maps API, the company can embed a Google Map directly in its application. Or a shoe company could make it easier for its retailers to sell shoes online by providing a shoe-sizing web service that the retailers could embed right into their websites.
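The map example can be sketched in a few lines of Python. This sketch assumes the Google Maps Embed API's place mode, which takes an API key (`key`) and a place name or address (`q`) as URL parameters. The address, the `YOUR_API_KEY` placeholder, and the helper name `map_iframe` are hypothetical. The company writes only the code that builds the request; Google's service does the actual mapping.

```python
from urllib.parse import urlencode

# Base URL of the Google Maps Embed API's "place" mode.
EMBED_BASE = "https://www.google.com/maps/embed/v1/place"


def map_iframe(address: str, api_key: str) -> str:
    """Return an HTML <iframe> snippet that shows `address` on a Google Map.

    The map itself is rendered by Google's servers; this code only
    composes the request URL (urlencode turns spaces into '+' and
    commas into '%2C').
    """
    query = urlencode({"key": api_key, "q": address})
    return (
        '<iframe width="600" height="450" style="border:0" '
        f'src="{EMBED_BASE}?{query}"></iframe>'
    )


if __name__ == "__main__":
    # Hypothetical caller address; a real key would come from Google Cloud.
    print(map_iframe("Space Needle, Seattle WA", "YOUR_API_KEY"))
```

The design choice is the point of web services: the support application stores only an address, and the licensed service handles geocoding, map tiles, and rendering.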

Web services can blur the lines between “build vs. buy.” Companies can choose to build an application themselves but then purchase functionality from vendors to supplement their system.

End-User Computing (EUC)

In many organizations, application development is not limited to the programmers and analysts in the information technology department. Especially in larger organizations, other departments develop their own department-specific applications. The people who build these applications are not necessarily trained in programming or application development, but they tend to be adept with computers. A person who is skilled in a particular program, such as a spreadsheet or database package, may be called upon to build smaller applications for use by their own department. This phenomenon is referred to as end-user development, or end-user computing.

End-user computing can have many advantages for an organization. First, it brings the development of applications closer to those who will use them. Because IT departments are sometimes backlogged, it also provides a means to have software created more quickly. Many organizations encourage end-user computing to reduce the strain on the IT department.

End-user computing does have its disadvantages as well. If departments within an organization develop their own applications, the organization may end up with several applications that perform similar functions, an inefficient duplication of effort. Sometimes these different versions of the same application produce different results, causing confusion when departments interact. End-user applications are often developed by someone with little or no formal training in programming. In these cases, the software can have problems that the IT department then has to resolve.

End-user computing can be beneficial to an organization provided it is properly managed. The IT department should set guidelines and provide tools for departments that want to create their own solutions. Communication between departments can go a long way toward the successful use of end-user computing.
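The following hypothetical Python sketch shows how duplicated end-user applications can disagree, and how a single shared definition supplied by IT can prevent that. The order data, the two departmental functions, and the business rule that net sales exclude returned orders are all invented for illustration.

```python
# Hypothetical order data that two departments both report on.
ORDERS = [
    {"id": 1, "amount": 1200.00, "returned": False},
    {"id": 2, "amount": 350.50, "returned": True},
    {"id": 3, "amount": 799.99, "returned": False},
]


def sales_dept_total(orders):
    # Sales' home-grown version: counts every order it booked.
    return round(sum(o["amount"] for o in orders), 2)


def finance_dept_total(orders):
    # Finance's home-grown version: leaves out returned orders.
    return round(sum(o["amount"] for o in orders if not o["returned"]), 2)


def net_sales(orders):
    """Shared definition published by IT (hypothetical rule: exclude returns).

    Every department calls this one function instead of keeping its own copy,
    so there is a single place to test and change the rule.
    """
    return round(sum(o["amount"] for o in orders if not o["returned"]), 2)


if __name__ == "__main__":
    print("Sales reports:  ", sales_dept_total(ORDERS))
    print("Finance reports:", finance_dept_total(ORDERS))
    print("Shared rule:    ", net_sales(ORDERS))
```

The two departmental versions report different totals for the same orders because each quietly applies its own rule; routing both departments through `net_sales` gives one answer that the IT department can test and maintain.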

Sidebar: Risks of EUCs as “Shadow IT”

The Federal Home Loan Mortgage Corporation, better known as Freddie Mac, was fined over $100 million in 2003 in part for understating its earnings. This triggered a large-scale project to restate its financials, which involved automating financial reporting to comply with the Sarbanes-Oxley Act of 2002. Part of the restatement project found that EUCs (such as spreadsheets and databases on individual laptops) were feeding into the general ledger. While EUCs were not the cause of Freddie Mac’s problems (they were a symptom of insufficient oversight), having such poor IT governance in so large a company was a serious issue. It turns out that these EUCs were created in part to shorten the time it took to change business processes (a common complaint in large corporations is that the IT department takes too long to get things done). As such, these EUCs served as a form of “shadow IT” that had not been through the normal rigorous testing process.

Implementation Methodologies

Once a new system is developed or purchased, the organization must determine the best method for implementing it. Convincing a group of people to learn and use a new system can be very difficult. Asking employees to use new software as well as follow a new business process can have far-reaching effects within the organization.

There are several different methodologies an organization can adopt to implement a new system. Four of the most popular are listed below.

- Direct cutover. In the direct cutover implementation methodology, the organization selects a particular date to terminate the use of the old system. On that date users begin using the new system and the old system is unavailable. Direct cutover has the advantage of being very fast and the least expensive implementation method. However, this method has the most risk. If the new system has an operational problem or if the users are not properly prepared, it could prove disastrous for the organization.

- Pilot implementation. In this methodology a subset of the organization known as a pilot group starts using the new system before the rest of the organization. This has a smaller impact on the company and allows the support team to focus on a smaller group of individuals. Also, problems with the new software can be contained within the group and then resolved.

- Parallel operation. Parallel operation allows both the old and new systems to be used simultaneously for a limited period of time. This method is the least risky because the old system remains in use while the new system is essentially being tested. However, it is by far the most expensive methodology, since work is duplicated and both systems must be fully supported.

- Phased implementation. Phased implementation provides for different functions of the new application to be gradually implemented with the corresponding functions being turned off in the old system. This approach is more conservative as it allows an organization to slowly move from one system to another.
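To show how the four methodologies differ in practice, here is a minimal Python sketch of a hypothetical routing helper that decides which system handles a given request. The cutover date, pilot group, list of migrated functions, and function names are all assumptions made for illustration; a real rollout would usually read such settings from configuration rather than hard-coding them.

```python
from datetime import date

# Hypothetical rollout settings (illustrative values only).
CUTOVER_DATE = date(2025, 7, 1)      # direct cutover: the day the old system is switched off
PILOT_GROUP = {"alice", "bob"}       # pilot: users who get the new system first
MIGRATED_FUNCTIONS = {"invoicing"}   # phased: functions already moved to the new system


def systems_for(method: str, user: str, function: str, today: date) -> list[str]:
    """Return which system(s) should handle a request under each methodology."""
    if method == "direct":
        # Everyone switches on the same date; there is no fallback afterward.
        return ["new"] if today >= CUTOVER_DATE else ["old"]
    if method == "pilot":
        # Only the pilot group uses the new system for now.
        return ["new"] if user in PILOT_GROUP else ["old"]
    if method == "parallel":
        # Both systems process the same work for a limited period.
        return ["old", "new"]
    if method == "phased":
        # Individual functions move over one at a time.
        return ["new"] if function in MIGRATED_FUNCTIONS else ["old"]
    raise ValueError(f"unknown methodology: {method}")


def parallel_check(old_result, new_result):
    """During parallel operation the old system's result is still the one used;
    any disagreement points to a problem in the new system."""
    if old_result != new_result:
        print(f"Mismatch: old={old_result!r}, new={new_result!r}")
    return old_result


if __name__ == "__main__":
    today = date(2025, 6, 30)
    print(systems_for("direct", "carol", "payroll", today))
    print(systems_for("pilot", "alice", "payroll", today))
    print(systems_for("parallel", "carol", "payroll", today))
    print(systems_for("phased", "carol", "invoicing", today))
    parallel_check(1250.0, 1200.0)
```

Only the parallel branch runs both systems, which is why it is both the safest and the most expensive option: every transaction is processed twice, and someone must reconcile the results.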

Your choice of an implementation methodology depends on the complexity of both the old and new systems. It also depends on the degree of risk you are willing to take.

Change Management

As new systems are brought online and old systems are phased out, it becomes important to manage the way change is implemented in the organization. Change should never be introduced in a vacuum. The organization should be sure to communicate proposed changes before they happen and plan to minimize the impact of the change that will occur after implementation. Change management is a critical component of IT oversight.

Sidebar: Mismanaging Change

Target Corporation, which operates more than 1,500 discount stores throughout the United States, opened 133 similar stores in Canada beginning in 2013. The company decided to implement a new Enterprise Resource Planning (ERP) system that would integrate data from vendors and customers and perform currency calculations (US dollars and Canadian dollars). This implementation coincided with Target Canada’s aggressive expansion plan and stiff competition from Wal-Mart. A two-year timeline, aggressive by any standard for an implementation of this size, did not account for data errors from multiple sources, which resulted in erroneous inventory counts and financial calculations. The supply chain became chaotic, and stores were plagued by shortages of common items, which undermined the key advantage of “one-stop shopping” for customers. In early 2015, Target Canada announced it was closing all 133 stores. In sum, “This implementation broke nearly all of the cardinal sins of ERP projects. Target set unrealistic goals, didn’t leave time for testing, and neglected to train employees properly.”[1]

Maintenance

After a new system has been introduced, it enters the maintenance phase. The system is in production and is being used by the organization. While the system is no longer actively being developed, changes need to be made when bugs are found or new features are requested. During the maintenance phase, IT management must ensure that the system continues to stay aligned with business priorities and continues to run well.

Summary

Software development is about so much more than programming. It is fundamentally about solving business problems. Developing new software applications requires several steps, from the formal SDLC process to more informal processes such as agile programming or lean methodologies. Programming languages have evolved from very low-level machine-specific languages to higher-level languages that allow a programmer to write software for a wide variety of machines. Most programmers work with software development tools that provide them with integrated components to make the software development process more efficient. For some organizations, building their own software does not make the most sense. Instead, they choose to purchase software built by a third party to save development costs and speed implementation. In end-user computing, software development happens outside the information technology department. When implementing new software applications, there are several different types of implementation methodologies that must be considered.

Study Questions

- What are the steps in the SDLC methodology?

- What is RAD software development?

- What makes the lean methodology unique?

- What are three differences between second-generation and third-generation languages?

- Why would an organization consider building its own software application if it is cheaper to buy one?

- What is responsive design?

- What is the relationship between HTML and CSS in website design?

- What is the difference between the pilot implementation methodology and the parallel implementation methodology?

- What is change management?

- What are the four different implementation methodologies?

Exercises

- Which software-development methodology would be best if an organization needed to develop a software tool for a small group of users in the marketing department? Why? Which implementation methodology should they use? Why?

- Doing your own research, find three programming languages and categorize them in these areas: generation, compiled vs. interpreted, procedural vs. object-oriented.

- Some argue that HTML is not a programming language. Doing your own research, find three arguments for why it is not a programming language and three arguments for why it is.

- Read more about responsive design using the link given in the text. Provide the links to three websites that use responsive design and explain how they demonstrate responsive-design behavior.

Labs

1. Here’s a Python program for you to analyze. The code below deals with a person’s weight and height. See if you can guess what will be printed and then try running the code in a Python interpreter such as https://www.onlinegdb.com/online_python_interpreter.

```python
measurements = (8, 20)
print("Original measurements:")
for measurement in measurements:
    print(measurement)

measurements = (170, 72)
print("\nModified measurements:")
for measurement in measurements:
    print(measurement)
```

2. Here’s a broken Java program for you to analyze. The code below is meant to calculate tuition by multiplying the tuition rate by the number of credits taken, which the user enters. However, the program is broken and gives an incorrect answer. Review the program below and determine what it would output if the user entered “6” for the number of credits. How would you fix the program so that it gives the correct output?

```java
package calcTuition;

// import Scanner
import java.util.Scanner;

public class CalcTuition
{
    public static void main(String[] args)
    {
        // Declare variables
        int credits;
        final double TUITION_RATE = 100;
        double tuitionTotal;

        // Get user input
        Scanner inputDevice = new Scanner(System.in);
        System.out.println("Enter the number of credits: ");
        credits = inputDevice.nextInt();

        // Calculate tuition
        tuitionTotal = credits + TUITION_RATE;

        // Display tuition total
        System.out.println("Your total tuition is: " + tuitionTotal);
    }
}
```

[1] Taken from ACC Software Solutions, “The Many Faces of Failed ERP Implementations (and How to Avoid Them),” https://4acc.com/article/failed-erp-implementations/